gaussian-process-regression

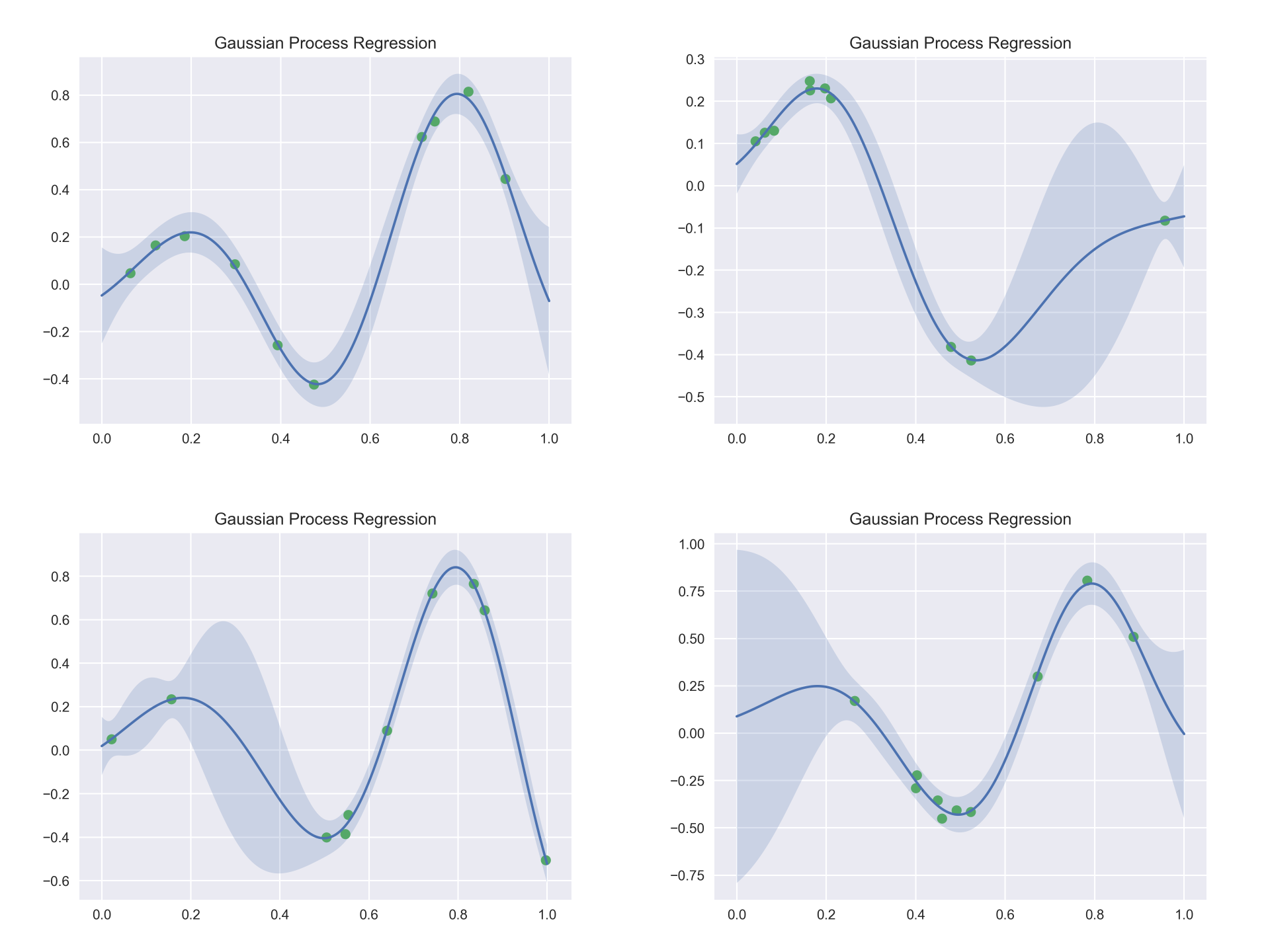

Gaussian process regression (GPR) for scattered data interpolation and function approximation.

Header

#include <mathtoolbox/gaussian-process-regression.hpp>

Internal Dependencies

Overview

Input

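The input consists of a set of $N$ scattered data points:

$$
\{ (\mathbf{x}_i, y_i) \}_{i = 1, \ldots, N},
$$

where $\mathbf{x}_i \in \mathbb{R}^{D}$ is the $i$-th data point location in a $D$-dimensional space and $y_i \in \mathbb{R}$ is its associated value. This input data is also denoted as

$$
\mathbf{X} = \begin{bmatrix} \mathbf{x}_1 & \cdots & \mathbf{x}_N \end{bmatrix} \in \mathbb{R}^{D \times N}
$$

and

$$
\mathbf{y} = \begin{bmatrix} y_1 & \cdots & y_N \end{bmatrix}^{\top} \in \mathbb{R}^{N}.
$$

Here, each observed value $y_i$ is assumed to be a noisy version of the corresponding latent function value $f_i$. More specifically,

$$
y_i = f_i + \epsilon_i,
$$

where

$$
\epsilon_i \sim \mathcal{N}(0, \sigma_n^2).
$$

The noise variance $\sigma_n^2$ is treated as one of the hyperparameters of this model.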

Output

Given the data and the Gaussian process assumption, GPR can calculate the most likely value $y$ and its variance $\text{Var}(y)$ for an arbitrary location $\mathbf{x}$.

The variance $\text{Var}(y)$ indicates how uncertain the estimate is. When it is large, the estimated value may not be trustworthy; this often occurs in regions with fewer data points.

As the predicted value follows a Gaussian distribution, its 95% confidence interval can be obtained by $\left[ y - 1.96 \sqrt{\text{Var}(y)}, \; y + 1.96 \sqrt{\text{Var}(y)} \right]$.
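The interval computation can be sketched in a few lines of C++. This is only an illustration: `CalcConfidenceInterval95` is a hypothetical helper that is not part of mathtoolbox, and it assumes the caller already has a predicted mean and standard deviation.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

// Returns the (lower, upper) bounds of the 95% interval of a Gaussian
// prediction with the given mean and standard deviation.
// NOTE: `CalcConfidenceInterval95` is a hypothetical helper for this sketch.
inline std::pair<double, double> CalcConfidenceInterval95(const double mean, const double stdev)
{
    assert(stdev >= 0.0);

    // 1.96 is approximately the 97.5th percentile of the standard normal distribution
    constexpr double z = 1.96;

    return {mean - z * stdev, mean + z * stdev};
}
```

The values returned by the prediction methods described under Usage could be passed in directly as `mean` and `stdev`.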

Math

Covariance Function

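The automatic relevance determination (ARD) Matérn 5/2 kernel is the default choice:

$$
k(\mathbf{x}_p, \mathbf{x}_q ; \sigma_f^2, \boldsymbol{\ell}) = \sigma_f^2 \left( 1 + \sqrt{5 r^2} + \frac{5}{3} r^2 \right) \exp \left( - \sqrt{5 r^2} \right),
$$

where

$$
r^2 = \sum_{i = 1}^{D} \frac{(x_{p, i} - x_{q, i})^2}{\ell_i^2},
$$

$x_{p, i}$ is the $i$-th component of $\mathbf{x}_p$, and $\sigma_f^2$ (the signal variance) and $\boldsymbol{\ell} = [\ell_1, \ldots, \ell_D]^{\top}$ (the characteristic length-scales) are its hyperparameters. That is,

$$
\boldsymbol{\theta}_{\text{kernel}} = \begin{bmatrix} \sigma_f^2 \\ \boldsymbol{\ell} \end{bmatrix} \in \mathbb{R}^{D + 1}_{> 0}.
$$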
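As a rough illustration of how the ARD Matérn 5/2 kernel can be evaluated, the following sketch uses plain `std::vector` rather than Eigen so that it is self-contained. The function name `CalcArdMatern52` and its parameter names are hypothetical and are not taken from the library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Evaluates the ARD Matern 5/2 kernel for two D-dimensional points.
// `sigma_f_squared` is the signal variance and `length_scales` holds one
// positive length-scale per dimension.
// NOTE: `CalcArdMatern52` is a hypothetical name chosen for this sketch.
inline double CalcArdMatern52(const std::vector<double>& x_p,
                              const std::vector<double>& x_q,
                              const double               sigma_f_squared,
                              const std::vector<double>& length_scales)
{
    assert(x_p.size() == x_q.size());
    assert(x_p.size() == length_scales.size());

    // Squared distance, with each dimension scaled by its own length-scale
    double r_squared = 0.0;
    for (std::size_t i = 0; i < x_p.size(); ++i)
    {
        const double scaled_diff = (x_p[i] - x_q[i]) / length_scales[i];
        r_squared += scaled_diff * scaled_diff;
    }

    const double sqrt_5_r_squared = std::sqrt(5.0 * r_squared);

    return sigma_f_squared * (1.0 + sqrt_5_r_squared + (5.0 / 3.0) * r_squared) *
           std::exp(-sqrt_5_r_squared);
}
```

Two properties are easy to check with this sketch: the kernel equals the signal variance when both points coincide, and stretching a dimension's length-scale by the same factor as the distance along it leaves the value unchanged.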

Mean Function

A constant-value function is used:

$$
m(\mathbf{x}) = 0.
$$

Data Normalization

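Optionally, this implementation offers automatic data normalization. If this is enabled, it applies the following normalization:

$$
y_i \leftarrow s \cdot \frac{y_i - \mu}{\sigma} \quad \text{for} \quad i = 1, \ldots, N,
$$

where $\mu$ and $\sigma$ are the mean and the standard deviation of the observed values, and $s$ is an empirically selected scaling coefficient. This normalization can make the regression more robust across datasets, so that similar hyperparameter values remain usable without drastic retuning.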
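A minimal sketch of this kind of normalization is shown below. It rests on several assumptions that the text does not confirm: the values are centered by their mean, scaled by their population standard deviation, and the scaling coefficient is supplied by the caller. `NormalizeValues` is a hypothetical helper, not the library's API.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Normalizes observed values as y_i <- s * (y_i - mu) / sigma.
// Assumptions of this sketch: mu is the mean of y, sigma is its population
// standard deviation, and the scale s is passed in by the caller.
// NOTE: `NormalizeValues` is a hypothetical helper for illustration only.
inline std::vector<double> NormalizeValues(const std::vector<double>& y, const double s)
{
    assert(!y.empty());

    double mu = 0.0;
    for (const double value : y) { mu += value; }
    mu /= static_cast<double>(y.size());

    double variance = 0.0;
    for (const double value : y) { variance += (value - mu) * (value - mu); }
    variance /= static_cast<double>(y.size());

    const double sigma = std::sqrt(variance);
    assert(sigma > 0.0); // constant data cannot be scaled this way

    std::vector<double> normalized;
    normalized.reserve(y.size());
    for (const double value : y) { normalized.push_back(s * (value - mu) / sigma); }

    return normalized;
}
```

With a transform of this form, a prediction made in the normalized space would need to be mapped back through the inverse, that is, multiplied by the standard deviation, divided by the scale, and shifted by the mean.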

Selecting Hyperparameters

There are two options for setting hyperparameters:

- Set them manually

- Determine them by maximum likelihood estimation

Maximum Likelihood Estimation

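Let $\boldsymbol{\theta}$ be a concatenation of the hyperparameters (the kernel hyperparameters followed by the noise variance); that is,

$$
\boldsymbol{\theta} = \begin{bmatrix} \boldsymbol{\theta}_{\text{kernel}} \\ \sigma_n^2 \end{bmatrix} \in \mathbb{R}^{D + 2}.
$$

In this approach, these hyperparameters are determined by solving the following numerical optimization problem:

$$
\boldsymbol{\theta}^{\text{ML}} = \mathop{\text{arg max}}_{\boldsymbol{\theta}} \; p(\mathbf{y} \mid \mathbf{X}, \boldsymbol{\theta}).
$$

In this implementation, this maximization problem is solved by the L-BFGS method (a gradient-based local optimization algorithm). Because the method is local, an initial solution must be specified, and the result may depend on it.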
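For reference, the likelihood being maximized has a well-known closed form for a zero-mean Gaussian process with Gaussian noise (see Rasmussen and Williams 2006, Chapter 2). The block below states its logarithm, which has the same maximizer. The symbols $\mathbf{K}$ and $\mathbf{K}_y$ are introduced here for illustration, and whether the implementation evaluates exactly this expression is an assumption.

```latex
% N: number of data points, sigma_n^2: noise variance, k: covariance function
\log p(\mathbf{y} \mid \mathbf{X}, \boldsymbol{\theta})
  = - \frac{1}{2} \mathbf{y}^{\top} \mathbf{K}_{y}^{-1} \mathbf{y}
    - \frac{1}{2} \log \left| \mathbf{K}_{y} \right|
    - \frac{N}{2} \log 2 \pi,
\qquad
\mathbf{K}_{y} = \mathbf{K} + \sigma_{n}^{2} \mathbf{I},
\qquad
K_{pq} = k(\mathbf{x}_p, \mathbf{x}_q)
```

The first term rewards fitting the data, the second penalizes model complexity, and the third is a constant that does not affect the optimization.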

Usage

Instantiation and Data Specification

A GPR object is instantiated with data specification in its constructor:

GaussianProcessRegressor(const Eigen::MatrixXd& X,
                         const Eigen::VectorXd& y,
                         const KernelType       kernel_type            = KernelType::ArdMatern52,
                         const bool             use_data_normalization = true);

Hyperparameter Selection

Hyperparameters are set by either

void SetHyperparams(const Eigen::VectorXd& kernel_hyperparams, const double noise_hyperparam);

or

void PerformMaximumLikelihood(const Eigen::VectorXd& kernel_hyperparams_initial,
                              const double           noise_hyperparam_initial);

Prediction

Once a GPR object is instantiated and its hyperparameters are set, it is ready for prediction. For an unknown location $\mathbf{x}$, the GPR object predicts the most likely value $y$ by the following method:

double PredictMean(const Eigen::VectorXd& x) const;

It also predicts the standard deviation $\sqrt{\text{Var}(y)}$ by the following method:

double PredictStdev(const Eigen::VectorXd& x) const;
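For orientation, the textbook GPR predictive equations for a zero-mean prior (Rasmussen and Williams 2006, Chapter 2) are given below. The symbols $\mathbf{k}_{*}$ and $\mathbf{K}_y$ are introduced here for illustration. That the two methods correspond to these expressions, and whether the reported variance is that of the latent function or also includes the noise variance, are assumptions not confirmed by this page.

```latex
% k: covariance function, x_1..x_N: data point locations, sigma_n^2: noise variance
\mathbf{k}_{*} = \begin{bmatrix} k(\mathbf{x}, \mathbf{x}_1) & \cdots & k(\mathbf{x}, \mathbf{x}_N) \end{bmatrix}^{\top},
\qquad
\mathbf{K}_{y} = \mathbf{K} + \sigma_{n}^{2} \mathbf{I},
\qquad
K_{pq} = k(\mathbf{x}_p, \mathbf{x}_q)

% Predictive mean and variance of the latent function value at x
\text{mean} = \mathbf{k}_{*}^{\top} \mathbf{K}_{y}^{-1} \mathbf{y},
\qquad
\text{variance} = k(\mathbf{x}, \mathbf{x}) - \mathbf{k}_{*}^{\top} \mathbf{K}_{y}^{-1} \mathbf{k}_{*}
```

The standard deviation is the square root of this variance. Far from all data points, $\mathbf{k}_{*}$ approaches zero, so the mean falls back to the prior mean and the variance approaches $k(\mathbf{x}, \mathbf{x})$.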

Useful Resources

- Mark Ebden. 2015. Gaussian Processes: A Quick Introduction. arXiv:1505.02965.

- Carl Edward Rasmussen and Christopher K. I. Williams. 2006. Gaussian Processes for Machine Learning. The MIT Press. Online version: http://www.gaussianprocess.org/gpml/